- How to install PySpark in Python how to
- How to install PySpark in Python code
- How to install PySpark in Python download

How to install PySpark in Python download

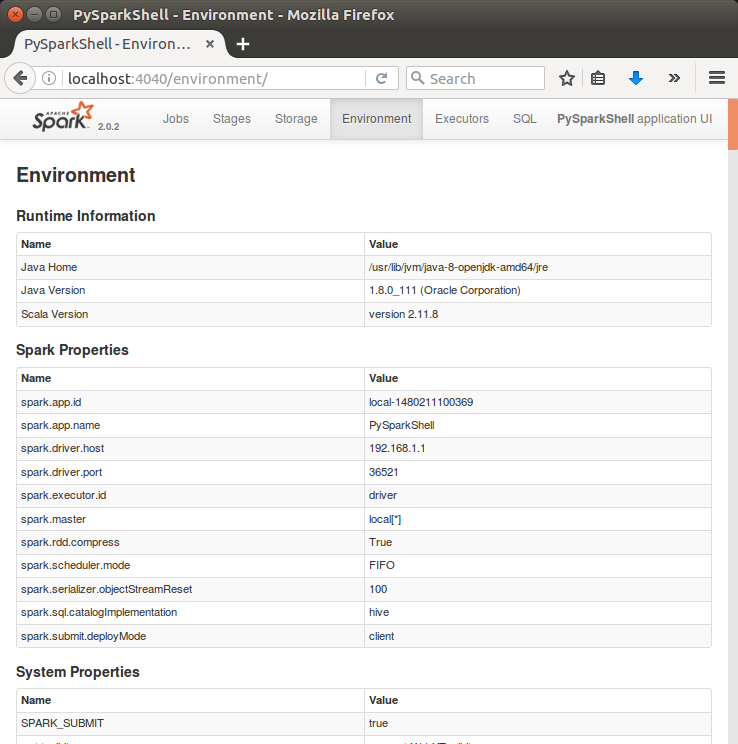

At a high level, these are the steps to install PySpark and integrate it with Jupyter Notebook. Some familiarity with the command line will be necessary to complete the installation.

- Install Java 7+, which Spark requires; you can download it from Oracle's website.
- Install Python 2.6 or higher (we prefer to use Python 3.4+).
- Go to the Spark downloads page, keep the default options in steps 1 to 3, and download a zipped version (.tgz file) of Spark from the link in step 4.

These minimums come from older Spark releases: Spark 3.x needs Java 8 or later, and Spark 3.1 and later need Python 3.6 or later.
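Checking the Java and Python prerequisites can be scripted before downloading anything. The sketch below uses only the standard library; `parse_java_major`, `java_major_version` and `check_prereqs` are hypothetical helper names, and the default minimums (Java 8, Python 3.6) are assumptions you should set to whatever your Spark release documents. Note that `java -version` writes its output to stderr, not stdout.

```python
import re
import shutil
import subprocess
import sys
from typing import List, Optional, Sequence


def parse_java_major(version_output: str) -> Optional[int]:
    """Pull the major Java version out of `java -version` output.

    Java 8 and older print e.g. "1.8.0_331" (major = second number);
    Java 9 and later print e.g. "11.0.15" or "17" (major = first number).
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        return None
    first = int(match.group(1))
    if first == 1 and match.group(2) is not None:
        return int(match.group(2))  # legacy "1.x" numbering
    return first


def java_major_version() -> Optional[int]:
    """Run `java -version` (it writes to stderr) if java is on PATH."""
    if shutil.which("java") is None:
        return None
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return parse_java_major(result.stderr + result.stdout)


def check_prereqs(
    java_major: Optional[int],
    python_version: Sequence[int],
    min_java: int = 8,  # assumption: use your Spark release's minimum
    min_python: Sequence[int] = (3, 6),  # assumption, as above
) -> List[str]:
    """Return a list of unmet prerequisites (an empty list means all good)."""
    problems: List[str] = []
    if java_major is None:
        problems.append("java not found on PATH (or its version was not recognised)")
    elif java_major < min_java:
        problems.append(f"Java {java_major} found; need Java {min_java}+")
    if tuple(python_version[:2]) < tuple(min_python):
        found = ".".join(map(str, python_version[:2]))
        wanted = ".".join(map(str, min_python))
        problems.append(f"Python {found} found; need Python {wanted}+")
    return problems


if __name__ == "__main__":
    issues = check_prereqs(java_major_version(), sys.version_info[:2])
    print("\n".join(issues) if issues else "Java and Python look OK for Spark")
```

Keeping `check_prereqs` free of side effects (it takes the versions as arguments) makes it easy to test without Java installed.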

How to install PySpark in Python how to

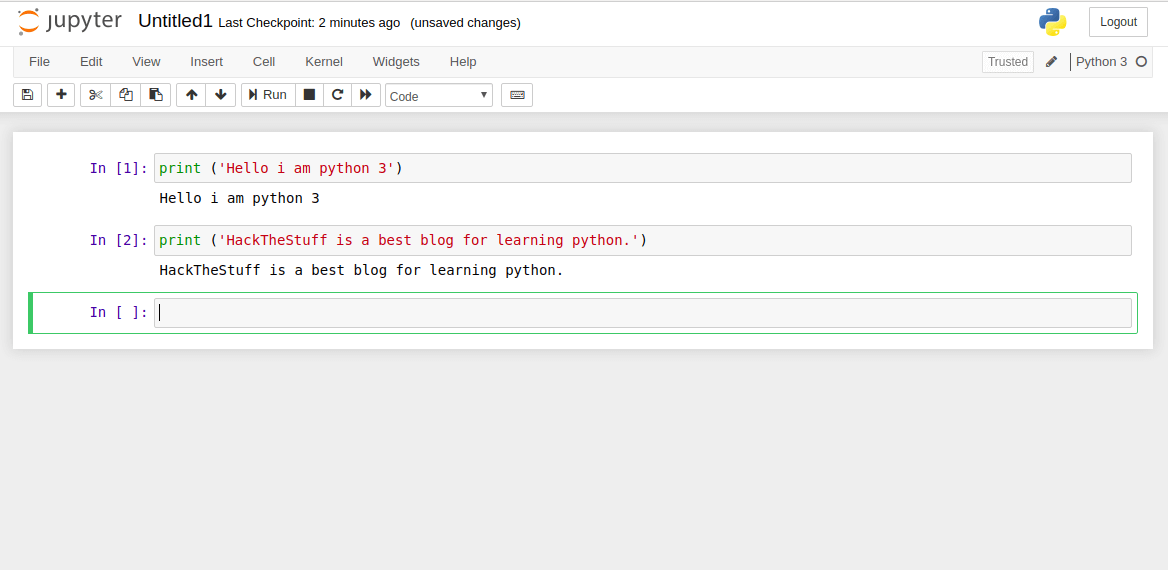

In this post, we'll dive into how to install PySpark locally on your own computer and how to integrate it into the Jupyter Notebook workflow. At Dataquest, we've released an interactive course on Spark with a focus on PySpark, where we explore the fundamentals of Map-Reduce and how to use PySpark to clean, transform, and munge data.

I thought that while I try to figure out this conundrum, it would be best to document the process here. Before starting, I made sure to delete all traces of PySpark and Spark from my machine so I could start fresh.

1. Delete PySpark and Spark-related packages. Go to your terminal and run these commands. You can skip this step if you never installed Spark or PySpark on your machine.
   - `pip uninstall pyspark`
   - `pip uninstall findspark`
   - `sudo apt-get remove --auto-remove spark`
   - Optional (run either one): `sudo apt-get purge spark` or `sudo apt-get purge --auto-remove spark`

   First we uninstall PySpark and Findspark. Next we uninstall Spark and make sure it and all its dependencies and configurations are completely removed from the system, using the last three commands. The difference between the fourth command, `sudo apt-get purge spark`, and the fifth, `sudo apt-get purge --auto-remove spark`, is that the fourth removes the Spark package together with its configuration files, while the fifth also removes the dependencies that were installed only for it.

2. PySpark dependencies:
   - Python: install the version of Python that corresponds to the version of PySpark you're installing.
   - Java JDK: to run PySpark, you'll need Java 8 or a later version.
   - Apache Spark: since PySpark is an API layer that sits on top of Apache Spark, you'll definitely need to download it.
   - Environment variables: these are important because they let Spark know where the required files are.

3. Installing and setting up the Java JDK: if you already have Java installed, check its version with `java -version` (JDK 9 and later also accept `java --version`).

4. Extract the Spark .tgz file from the Downloads directory into an easily accessible directory of your choice; for me, it's my home directory.

5. Set up your Spark/PySpark environment variables. Type `sudo nano ~/.bashrc` in your terminal and enter the following environment paths at the end of your .bashrc file:
   - `source /etc/environment`
   - `export SPARK_HOME=/home/richarda/spark-3.2.1-bin-hadoop3.2`
   - `export PATH=$PATH:$SPARK_HOME/bin`
   - `export PYSPARK_PYTHON=/usr/local/bin/python3.7`
   - `export PYSPARK_DRIVER_PYTHON=/usr/local/bin/python3.7`
   - `export PYSPARK_SUBMIT_ARGS="--master local pyspark-shell"` (runs Spark locally; plain `local` uses a single worker thread, while `local[2]` would use two)
   - `export PYTHONPATH=$SPARK_HOME/python:$PYTHONPATH`
   - `export PATH=$PATH:$JAVA_HOME/jre/bin`

   Make sure "richarda" in SPARK_HOME is replaced with your own user name. Then type `source ~/.bashrc` so Bash re-reads your .bashrc file, and go back to your terminal.

Now for the moment of truth!
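Before the moment of truth, it can help to confirm that the variables from `~/.bashrc` actually reached Python. The sketch below is not part of the original walkthrough: `check_spark_env`, `smoke_test` and the app name `"install-check"` are hypothetical, and the checks simply mirror the .bashrc entries (SPARK_HOME, PYTHONPATH, PYSPARK_PYTHON, PYSPARK_DRIVER_PYTHON). The smoke test uses the standard `SparkSession.builder` API and only runs when you call it.

```python
import os
from pathlib import Path
from typing import List, Mapping


def check_spark_env(env: Mapping[str, str]) -> List[str]:
    """Return human-readable problems with the Spark variables found in `env`."""
    problems: List[str] = []

    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        problems.append("SPARK_HOME is not set (did you run `source ~/.bashrc`?)")
    else:
        home = Path(spark_home)
        # An extracted Spark distribution contains bin/ (spark-submit, pyspark)
        # and python/ (the pyspark package that PYTHONPATH should point at).
        for sub in ("bin", "python"):
            if not (home / sub).is_dir():
                problems.append(f"{home / sub} is missing")
        # Normalise entries so "/a/b//python" and "/a/b/python" compare equal.
        entries = {
            os.path.normpath(p)
            for p in env.get("PYTHONPATH", "").split(os.pathsep)
            if p
        }
        if os.path.normpath(home / "python") not in entries:
            problems.append(f"{home / 'python'} is not on PYTHONPATH")

    # Optional variables, but if set they must point at a real interpreter.
    for var in ("PYSPARK_PYTHON", "PYSPARK_DRIVER_PYTHON"):
        interpreter = env.get(var)
        if interpreter and not Path(interpreter).exists():
            problems.append(f"{var}={interpreter} does not exist")

    return problems


def smoke_test() -> int:
    """Start a local SparkSession and count a 5-row DataFrame (needs pyspark and Java)."""
    # Imported here so check_spark_env() still works where pyspark is absent.
    from pyspark.sql import SparkSession

    spark = (
        SparkSession.builder.master("local[*]")
        .appName("install-check")  # hypothetical app name
        .getOrCreate()
    )
    try:
        return spark.range(5).count()  # ids 0..4, so this returns 5
    finally:
        spark.stop()


if __name__ == "__main__":
    issues = check_spark_env(os.environ)
    print("\n".join(issues) if issues else "Spark environment variables look OK")
```

Run it in a fresh terminal after `source ~/.bashrc`; if it reports no problems, calling `smoke_test()` from a Python shell should return 5. Passing the environment in as a mapping, rather than reading `os.environ` inside the function, keeps the check easy to test.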

How to install PySpark in Python code

So the past few days I've had issues trying to install PySpark on my computer. I've looked up as many tutorials as I could, but every attempt ended with "PySpark is not defined" in VS Code after trying to import it.
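When `import pyspark` fails only inside VS Code, one common cause (an assumption here, not something this post confirms) is that the editor runs a different Python interpreter than the terminal, or was launched without the variables exported in `~/.bashrc`. The hypothetical `diagnose()` helper below reports what the running interpreter actually sees; run it once from the terminal and once from VS Code and compare. It also looks for py4j, because the pyspark package under `$SPARK_HOME/python` imports it, and Spark ships it as a zip under `$SPARK_HOME/python/lib`.

```python
import importlib.util
import os
import sys
from typing import Dict, Union


def diagnose() -> Dict[str, Union[str, bool, None]]:
    """Report what the *current* interpreter can see of Python, PySpark and Spark."""
    return {
        # Path of the interpreter actually running this code.
        "python": sys.executable,
        # find_spec returns None when a top-level module cannot be found.
        "pyspark_found": importlib.util.find_spec("pyspark") is not None,
        "py4j_found": importlib.util.find_spec("py4j") is not None,
        # None here usually means ~/.bashrc was never read by this process.
        "SPARK_HOME": os.environ.get("SPARK_HOME"),
        "PYTHONPATH": os.environ.get("PYTHONPATH"),
    }


if __name__ == "__main__":
    for key, value in diagnose().items():
        print(f"{key:<14} {value}")
```

If the two runs report different `python` paths, pointing VS Code's "Python: Select Interpreter" command at the terminal's interpreter is a reasonable first thing to try.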